We're in the business of building businesses.

We are in the business of building businesses so that everyone can thrive, whoever and wherever they are.

Our flagship global events will always be our pride and focus, but we're now building on these, creating year-round communities with shared passions and purpose, designed to help businesses and people grow continually.

ITS America Conference & Expo

The Americas » United States > Phoenix

22nd - 25th April 2024

Phoenix Convention Center

Hybrid

INTERTOOL

Europe » Austria > Wels

23rd - 26th April 2024

Messe Wels

Hybrid

SCHWEISSEN

Europe » Austria > Wels

23rd - 26th April 2024

Messe Wels

Hybrid

Electronics Manufacturing Korea

Asia » South Korea > Seoul

24th - 26th April 2024

COEX

NEPCON China

Asia » China > Shanghai

24th - 26th April 2024

Shanghai World Expo Exhibition & Convention Center

Hybrid

Automotive World Korea

Asia » South Korea > Seoul

24th - 26th April 2024

COEX

S-Factory Expo

Asia » China > Shanghai

24th - 26th April 2024

Shanghai World Expo Exhibition & Convention Center

SPEXA – Space Business Expo

Asia » Japan > Tokyo

24th - 26th April 2024

Tokyo Big Sight

Software & Apps Development Expo [Spring]

Asia » Japan > Tokyo

24th - 26th April 2024

Tokyo Big Sight

Sales DX Expo [Spring]

Asia » Japan > Tokyo

24th - 26th April 2024

Tokyo Big Sight

Embedded & Edge Computing Expo [Spring]

Asia » Japan > Tokyo

24th - 26th April 2024

Tokyo Big Sight

IT Operation Management & Data Center Expo [Spring]

Asia » Japan > Tokyo

24th - 26th April 2024

Tokyo Big Sight

Information Security Expo [Spring]

Asia » Japan > Tokyo

24th - 26th April 2024

Tokyo Big Sight

Digital Marketing Expo [Spring]

Asia » Japan > Tokyo

24th - 26th April 2024

Tokyo Big Sight

Cloud & BPR Expo [Spring]

Asia » Japan > Tokyo

24th - 26th April 2024

Tokyo Big Sight

IoT Solutions Expo [Spring]

Asia » Japan > Tokyo

24th - 26th April 2024

Tokyo Big Sight

Advanced E-Commerce & Retail Expo [Spring]

Asia » Japan > Tokyo

24th - 26th April 2024

Tokyo Big Sight

AI & Business Automation Expo [Spring]

Asia » Japan > Tokyo

24th - 26th April 2024

Tokyo Big Sight

Metaverse Expo [Spring]

Asia » Japan > Tokyo

24th - 26th April 2024

Tokyo Big Sight

Data Driven Management Expo [Spring]

Asia » Japan > Tokyo

24th - 26th April 2024

Tokyo Big Sight

The 32nd China (Shenzhen) International Gifts, Handicrafts, Watches & Houseware Fair

Asia » China > Shenzhen

25th - 28th April 2024

Shenzhen World Exhibition & Convention Center

The 8th Shenzhen Gifts, Consumer Goods Packaging & Printing Fair

Asia » China > Shenzhen

25th - 28th April 2024

Shenzhen World Exhibition & Convention Center

Expomed Eurasia

Europe » Türkiye > Istanbul

25th - 27th April 2024

Tuyap Fair Convention & Congress Center

The 13th China Mobile Electronics Fair

Asia » China > Shenzhen

25th - 28th April 2024

Shenzhen World Exhibition & Convention Center

Be YOU.

We thrive on the rich tapestry of backgrounds, ideas, and experiences that make us who we are. Inclusivity isn't a buzzword; it's our commitment.

Lending a hand.

At RX, we strive to make the world around us better through our dedication to sustainability, innovation and support for our local communities.

Stay in the loop.

Uncover the latest buzz and behind-the-scenes tales in our press releases and RX stories. From groundbreaking innovations to the faces behind our success, each story is a chapter in our vibrant narrative.

Net Zero by 2040!

At RX, we are serious about sustainability, which is why we signed the ‘Net Zero Carbon Events’ pledge in 2021 and have formed a dedicated Sustainability Council.

By 2030, we aim to cut our greenhouse gas emissions in half, and by 2040 we aim to achieve net zero. We've already made a good start!

Become an RXer.

Whether you're a seasoned pro or just starting out, we provide avenues for personal and professional development. If you're ambitious, committed, and excited to make a difference, we can't wait to connect with you. Join our team and be part of the RX magic! 🚀

The RX values.

At RX, we live by our NIMBLE culture, fueled by six core values: Networked, Inclusive, Magical, Love of Learning, and Entrepreneurial. These values not only keep us on track but also unite us in pursuit of shared goals.

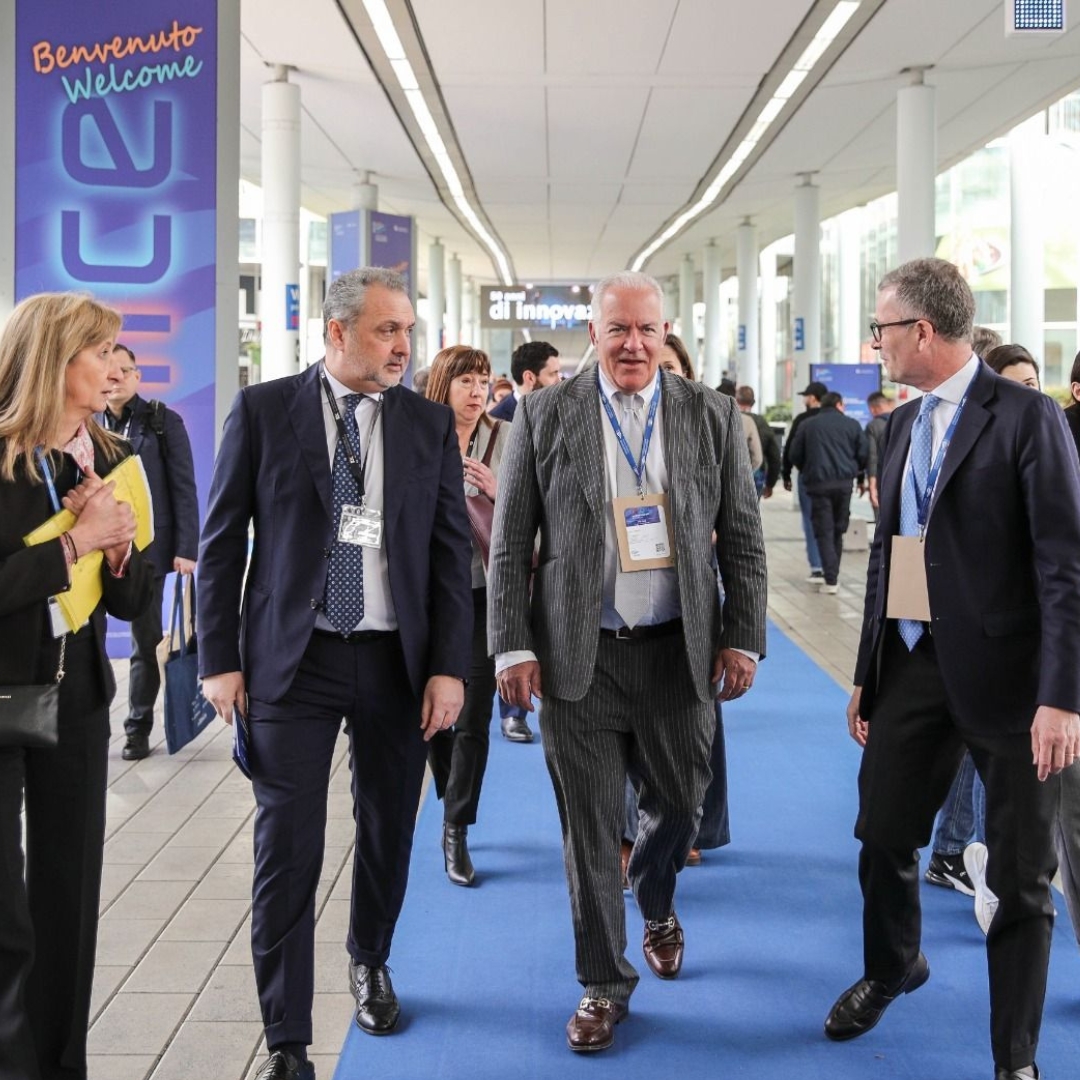

Meet our leaders.

Our dynamic team of experienced and visionary leaders thrives on a collective passion for our business. Together, we combine expertise and creativity to fuel innovation and achieve our objectives.

RXtra.

Keep up to date with the latest RX and event news with our LinkedIn newsletter.